This article is one part of a longer deep dive into Leios, Cardano’s proposed consensus upgrade. The full post is available at https://314pool.com/post/leios. We’ve broken it into smaller, standalone reads to make it more accessible for the Cardano Cube audience.

And now we come to the main topic that inspired me to write this blog: how to deal with conflicting transactions. This topic is the last major design hurdle remaining for Leios, and while we have multiple potential designs, they each involve sensitive tradeoffs that ultimately will be the broader Cardano community’s responsibility to decide on. We have several proposed designs that we’re fairly happy with on different dimensions, which I’ll outline, but it’s likely to be a contentious topic. Because of that, it’s very important that we approach the conversation with a thorough understanding of exactly what is, and what isn’t, possible and being proposed.

Here is a series of logical propositions that appear to be true, and create a fundamental tension in the Leios design:

- Any sufficiently high throughput design must eventually begin making concurrent decisions about which transactions to include

- Given that those decisions occur outside of each other’s light cones, there will be cases where those decisions conflict

- The optimistic, conflicting decisions must be resolved eventually

- Given the high throughput, each of those decisions, or the resolution, will involve a significant amount of computation

- Any nontrivial computation likely needs to be compensated by the network in some way, to ensure people will bother to do it

- An adversary that can leverage or amplify any un-compensated work can cheaply deny service to other users

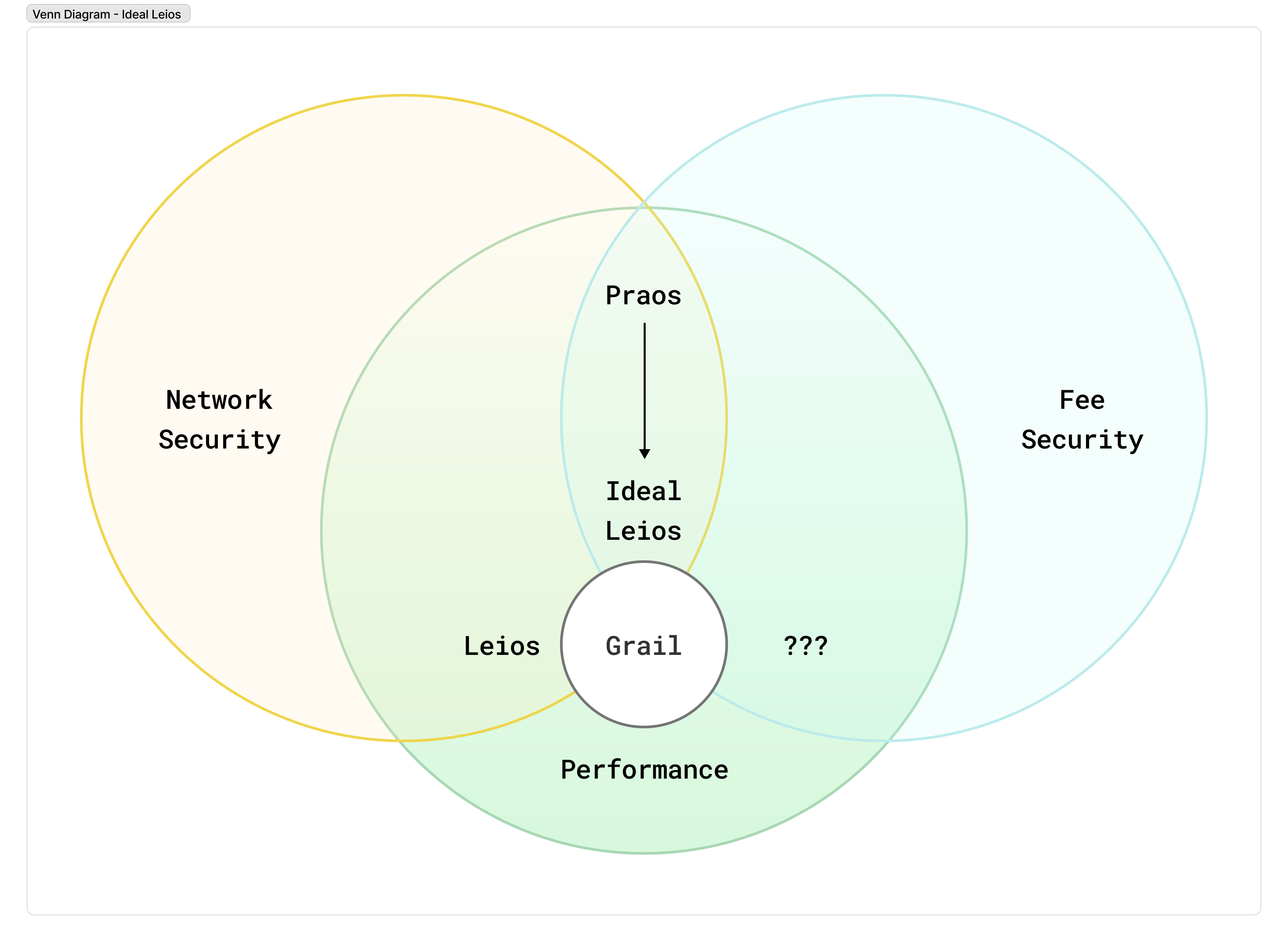

The above reasoning is carefully kept very generalized: it’s not about any one particular design. What this means is that seemingly any design we settle on for Leios that has sufficiently high throughput will create tension between the following properties:

- Network Security - If a transaction incurs significant computation on the network or is persisted into the permanent state of the chain, some user pays a fee to cover this cost.

- Fee Security - A user pays a predictable fee associated with their transaction if and only if it is applied as intended to the ledger (and thus does not conflict with other transactions).

- Performance - Transactions are produced concurrently and without coordination, and reach high confidence of inclusion in the final chain in a short period of time.

In the above chart, Fee Security and Network security are properties that a protocol can have; and performance is a gradient, which we want to optimize as much as we can within those constraints.

Optimizing for any two of these properties too thoroughly seems to make the third difficult. Praos optimizes for Network Security and Fee Security, by having a long enough quiet period between blocks so that decisions about which transactions to include are not produced concurrently too frequently. Leios attempts to optimize for Network Security and Performance; As described so far, Leios would likely need to sacrifice fee security. And so, we should examine this tradeoff, to identify if there’s a more comfortable point in the design space that gives us both properties, while still giving us the performance gains of Leios. (Note: we find it hard to imagine someone choosing to optimize for Fee Security and Performance, so we have no examples to give of a protocol that sacrifices Network Security!)

Determinism

This property is often called “transaction determinism” on Cardano. That is, a user is able to know two things:1) Their transaction is either completely applied to the ledger, or not at all.2) If it is applied, they can compute exactly the delta that is applied to the ledger state (including the fee they will be charged).

Note: Some people take umbrage with this term; They assert that ALL blockchains must be deterministic because given the same inputs, every block producer must arrive at the same result. However, it’s my opinion that this isn’t a useful distinction or definition to make. If a definition applies to everything or nothing, then it serves no purpose in the language: it doesn’t allow you to meaningfully differentiate two different cases. I personally prefer to assign a useful definition to “deterministic”, laid out above. A transaction on Ethereum, for example, is not deterministic from the users’ perspective, specifically because they cannot know all of the inputs to their transaction, and so its behavior is, to varying degrees, indeterminate. Regardless of what label you want to apply to it, however, what’s important is that you know that when I say transaction determinism in this article, that is what I mean.

Within Cardano, we are quite proud of this feature. It leads to a wonderful improvement in user experience because the alternative is painful and expensive. On Ethereum, for example, during periods of high load or volatility especially, users can get caught in a loop attempting to submit a transaction and having it fail, charging them for the work that has been done evaluating the smart contracts. Sometimes this fee can be in the tens of dollars for each failed transaction.

How does Praos achieve this today?

First, the designers of Praos have gone to great lengths to separate the work done to validate a transaction into two categories. One of those categories is the computation that is likely to be very and unpredictably expensive: running smart contracts. The other category is those that can be made incredibly cheap. We call these “Phase 2” and “Phase 1” validation rules, respectively.

The genius here is that all sources of non-determinism only show up in, and change the result of, phase 1 validation. This means that a node only ever has to run the scripts for a transaction once.

For example, smart contracts cannot view the current slot: the exact slot that a transaction gets included in isn’t known at the time you build the transaction, because we don’t know what the leadership schedule will be. However, you can set an upper and lower bound on the slots that a transaction is allowed to appear in, and those bounds are exposed to the smart contracts. The bounds will never change, so as time passes, you don’t need to re-run the script with a new slot number. On the other hand, the upper bound on a transaction can pass, making the transaction no longer valid. However, in that case, “revalidating” this condition is as simple as comparing two integers.

This cost is so low that a node is likely to do it for free, and an adversary is unlikely to be able to cheaply overwhelm a node with validity range checks.

Another example is checking whether each transaction input has been spent or not.

In the best case, a node validates the smart contracts of a transaction as it receives it and places it in the mempool, and then includes it in the next block it forges, or cheaply evicts the transaction from the mempool when a new block comes in, and the node only ever evaluated the scripts once.

In the worst case, a node receives a transaction, validates the scripts, and places it in the mempool. Then, they observe a block that conflicts with that transaction, validate it (including running the scripts), and evict the conflicting transaction from its mempool. The validation for the transaction in the mempool was “wasted”, but this is bounded to at most two validations. An adversary cannot amplify this wasted effort arbitrarily for four reasons:

- Two conflicting transactions will never be placed in the same mempool, meaning that each block with a conflicting transaction only invalidates a bounded amount of work.

- Transactions are not placed back into the mempool when switching forks, meaning those transactions can only represent wasted work once.

- The average time between blocks is large, meaning nodes aren’t CPU-bound. There is a huge gulf of space between blocks (on average) to absorb that extra computation.

- CPU usually isn’t metered: you pay for capacity, and so long as that capacity isn’t filled, the cost to the node operator is the same.

In Leios, however, the above isn’t necessarily true:

- The high rate of IB production means that a transaction is likely to be included in a block before the gossip protocol can alert you to a potentially conflicting transaction.

- The higher throughput means that there’s more computation to fill up that capacity and thus less forgiveness of wasted effort.

- The pipelined nature of the protocol also means there are fewer quiet periods that can absorb this work.

This means that Leios is more sensitive to an adversary creating and propagating these conflicting transactions to different parts of the network. It becomes more essential to charge for this work to prevent adversarial behavior, or at least to make it costly for such an adversary.

Does this mean that Leios is going to force us to give up our beloved “transaction determinism”? At this time, while we believe that Leios will require some “softening” of this property, we have multiple designs that allow us to provide 90% of the benefit to the user.

Said more plainly: If we assume that we want a blockchain that is secure, robust, and decentralized, then we cannot have both perfect determinism and high throughput. Praos sacrifices high throughput in return for perfect determinism. And Leios offers us the opportunity to soften that determinism in return for throughput. Exactly how much we soften this is an open design question that we, the Cardano Community, ultimately get to decide.

Duplication

It’s worth making a small aside here to observe that even without introducing an adversary, Leios (as described) has a simpler, related problem around duplicated effort. If you propagate transactions across the network, big chunks of the network will end up with the same, or very similar mempools. Thus, if two nodes produce input blocks concurrently, it’s highly likely that they will have largely the same contents. It doesn’t do much good to produce blocks concurrently if they’re just replicating the same work multiple times!

This is similar to but weaker than, the issue described above. Indeed, a transaction “conflicts” with itself exactly because it spends the same inputs! So while it doesn’t amplify the work done, duplicate blocks do waste the capacity of the network.

Ultimately, there is a diverse space of solutions to these problems that we’ve been exploring. Some of these we’re very early in the analysis process, some of these are not mutually exclusive, and the best approach may be a combination, and some of these only solve the problem under honest assumptions, and still need something further to address an adversary. Still, despite not having a clear and definitive recommendation for which of these is best or a complete understanding of the tradeoffs, I’ll walk through them to give you a look at the incredibly rich landscape we’re considering. We’d love to hear community feedback on these different choices!

Deduplication

The simplest solution to this problem, and to avoid both duplicated effort and duplicated storage, is to design the protocol to support “tombstoning”. The idea here is to design the block hashes in such a way that duplicate and conflicting transactions can be pruned from the protocol. A purely duplicate transaction doesn’t need to be validated twice (it is simply recognized as duplicated and skipped), nor does it need to be stored a second time on disk.

Similarly, we can employ the notion of a “tombstone” to prune conflicting transactions from the history and keep the permanent ongoing cost of honest conflicts low.

In such a strategy, the ID of the input block would be defined, not as the hash of all of the transactions concatenated together, but as the hash of the IDs of each transaction concatenated together. When a transaction conflicts and gets filtered from the ledger, then once it gets written to the immutable portion of the chain, it can be deleted, keeping only the transaction ID. You can think of this like a “tombstone”, marking a pruned transaction, where the transaction ID is the epitaph. Since the ID of the input block is defined by the hash of all the transaction IDs, you still have enough information to reproduce the input block hash.

When someone is syncing from a peer, they still need confirmation that the transaction was indeed conflicting with another transaction that eventually makes its way into a ranking block. To that end, the endorsement block records which transactions were conflicting. Since the endorsement block has a certificate from the majority of the stake on the network, this gives high confidence that the transaction was indeed in conflict and was safe to prune. Perhaps this serves as an “obituary”, confirming the validity of a tombstone.

These tombstones aren’t valid in the volatile region of the chain, while we’re still reaching consensus, since nodes still need to know whether to endorse the block. But they do allow you to prune the historical state, minimizing the ongoing costs. You simply defer validating an input block with tombstones until you see the endorsement block with the obituary. If one never materializes, that fork is invalid and you look for another peer.

This alone doesn’t resolve the problem with conflicts in the presence of an adversary, however. A malicious actor can still flood the network with invalid transactions, filling up throughput capacity on the network with conflicting transactions that don’t cost them anything. This denial of service attack means we need to look for other solutions as well.

Coloring

Another “honest party” friendly solution is the notion of transaction coloring. Each transaction is randomly (or even explicitly, to assist with transaction chaining), assigned a color. When an input block is produced, it also has a color, and only includes transactions of the same color. If a node runs out of transactions to include, it can deterministically derive another color and continue including transactions from that color.

This allows different nodes which produce input blocks concurrently to ensure that the contents of their blocks are shuffled, so we avoid wasted/duplicated effort.

It doesn’t, however, prevent an adversary from intentionally crafting conflicting transactions that have different colors. They can vary some small innocuous property of the transaction until the hash places it in a different color, and create an adversely high percentage of wasted effort and capacity for the network.

Sharding

One way to extend the “coloring” idea above to harden it against an adversary is to go further and apply the separation to the ledger itself. More commonly referred to as “sharding,” by dividing transactions into lanes that cannot conflict by construction, and then using these lanes for our concurrent production capacity, we eliminate the problem! This works even in a probabilistic form: even if we just make such conflicts rare, even under adversarial conditions the network can afford to absorb the extra work without forfeiting any fees from the conflicted transactions.

There’s a number of ways this can work in practice, but here’s one example:

- Each transaction output is optionally labeled with a “shard ID”

- Each transaction includes a collateral field, and each collateral input must come from the same shard

- Each input on a transaction must be either labeled with that same shard, or unlabeled

- Each input block is randomly assigned to a shard, and can only include transactions from that shard

This allows users to avoid losing fees: just ensure that if your transaction includes unlabeled inputs, you don’t sign any transaction that conflicts with that one. And if an adversary intentionally creates these conflicts, the ledger can forfeit those fees.

Unfortunately, there are major challenges in designing this correctly:

First, this dramatically complicates the ledger and the user experience. At the very least wallets need to manage these labeled outputs and avoid allowing the user to sign conflicting transactions. This is also a huge potential complexity cost to the ecosystem, and upgrading all of the indexers, libraries, transaction builders, dApps, wallets, and other infrastructure is no small undertaking!

Second, it is difficult to balance the number of shards, at least while they’re produced pseudorandomly. Low shard counts mean there’s a higher probability of concurrent conflicts. Increasing the number of shards reduces the probability that they will conflict, but also lengthens the average time between the same shard recurring, which means transaction latency goes up dramatically. And there doesn’t appear to be a sweet spot, only an uncomfortable compromise where you have to deal with the worst of both worlds.

We’re still exploring this design space. In particular, perhaps the pseudorandom production of input blocks isn’t as important, and we can resolve these problems with a predefined shard schedule. This doesn’t solve the ecosystem-level complexity but could allow the protocol to keep both conflicts and latency low.

At the extreme ends of throughput (1,000, 2,000, and 10,000 transactions per second), it’s likely that some form of sharding or mutual exclusion will be needed.

However, what the analysis above makes clear is that Cardano doesn’t need 1,000+ TPS to solve its sustainability problem. In the fullness of time, this may be necessary, but in the short term, a simpler model that allows us to go from ~5 TPS to ~100 TPS would be a dramatic upgrade! There are some exciting alternative designs we’ve been considering in this space.

Collateralization

Rather recently, the Leios team has been analyzing another design that is far simpler and seems very promising, at least at lower throughput targets. Importantly, those lower throughput targets still represent a dramatic upgrade for the Cardano chain and don’t preclude more complex solutions in the future.

The main insight stems from looking at why deterministic transactions are valuable to Cardano users, and why losing them is painful.

When a user submits a transaction on Ethereum and it fails, they lose the gas fees spent processing that transaction. This is painful because the user paid for a service they did not receive. It’s analogous to walking up to the barista at a Starbucks, tapping their card, and paying $6 dollars a latte, and then the barista tells them that too many people ordered lattes and the machine is broken, but not offering a refund. If the user really needs that transaction to happen, they need to retry, and may end up paying that failing transaction fee again! Finally, the times when these failures are most likely directly correlate with the urgency of those transactions: during a hyped launch, or market volatility threatening a liquidation, it is really important to the user (economically) that this transaction goes through.

On Cardano, deterministic transactions only solve half of that problem. In cases of high load, you may still be unable to get your transaction on chain, but at least you aren’t charged for them.

Leios, implemented naively, threatens to flip this: the chain has extreme capacity, but you may end up paying for a service that you didn’t receive.

What if we forfeit a small, bounded amount of determinism, but ensure that you get the service you paid for.

The main idea here is that, with input blocks produced by a VRF lottery, then even over rather short periods of time, there is a tight distribution of how many concurrent blocks might be produced. At an average of one input block per second, and an average diffusion time of 5 seconds, we might see 5 or 10 input blocks produced outside of each other’s light cones, but we likely will not see 100 or 1000 concurrent input blocks.

Thus, the maximum amount of inherent conflict an attacker can produce is bounded in the same way. If an input block is produced inside the light cone of another, it can avoid creating conflicts with that input block.

And so in practice, if a user puts some collateral at risk in case of conflicts, that collateral only needs to be as high as this potential throughput. Even if there are short bursts of higher concurrency that allow more conflicts than the collateral that is available to pay for it, this can’t be sustained by an adversary.

And, importantly, there’s a seemingly heretical choice buried in the sand here: what if, instead of forfeiting the collateral from the transactions that get filtered from the chain, you forfeit the collateral from the transaction that did impact the ledger? So, the winning transaction pays for the effort used to validate the losing transactions.

Technically, this violates Cardano’s deterministic transaction property. Now, instead of the two outcomes described above, there are three potential outcomes:

- Your transaction has no impact on the ledger whatsoever as if it had never been sent

- Your transaction gets included, you pay the normal fees, and it updates the ledger in the way you intended

- New: Your transaction gets included, and you pay elevated fees, and updates the ledger in the way you intended

The psychology of this is fundamentally different from what we typically refer to as failed transactions. In all cases, you only pay for the services you receive! Sure, you might pay more in fees, but at the very least you accomplished what you were trying to accomplish. It’s also fairer, in some sense, since the winner is the one who got the economic value out of the transaction. If someone avoided being liquidated for 20,000 ADA (or just made 20,000 ADA on a liquidation), they’re unlikely to mind paying a little bit extra in fees.

And remember, the risk of forfeiting this is only constrained to cases where two people are able to spend the same transaction output, which largely boils down to an adversary or shared script UTxOs.

The feasibility of this now entirely depends on how big that over-collateralization has to be. If there’s a risk that you lose 5000 ADA, nobody would transact again. But if you might end up paying 1 ADA in fees as opposed to 0.2 ADA in fees, then it may be an entirely reasonable design.

This is why I mentioned it’s likely a viable solution at lower throughputs, but becomes impractical at higher throughput. At 100 transactions per second, this is roughly 5-10 times multiple on the transaction fees. So, instead of paying 0.2 - 1 ADA in fees, you might pay 2 - 10 ADA in fees in rare cases.

However, at the upper end of throughput, in the thousands of transactions per second that Leios could support, this collateralization tends to become impractical, and we may need additional solutions beyond that. But, in the interest of solving the sustainability problem and ratcheting Cardano up to the next rung of the ladder, this represents an attractive, low-complication solution with interesting properties.

There is currently no consensus on which of these solutions is the best, or if this even represents the totality of the landscape, even within the Leios research team. We’re still looking at, simulating, tuning, and debating all of these designs. Indeed, as we bring new members onto the team and solicit community feedback, assumptions are being challenged every day that help us either reach a better understanding of the nature of the problems at high throughput or reveal new ideas that are worth exploring. We provide this deep insight into our process not to invite controversy, but to lay out, transparently, what the risks and challenges actually are, and dispel fear and dogma around what people think they might be.